#12: Understanding Tokenization in LLMs

In earlier posts, we explored how large language models (LLMs) work at a high level, followed by a deeper dive into how data is collected for training these models. Today, we’ll focus on an essential concept in natural language processing (NLP): tokenization.

What Are Tokens?

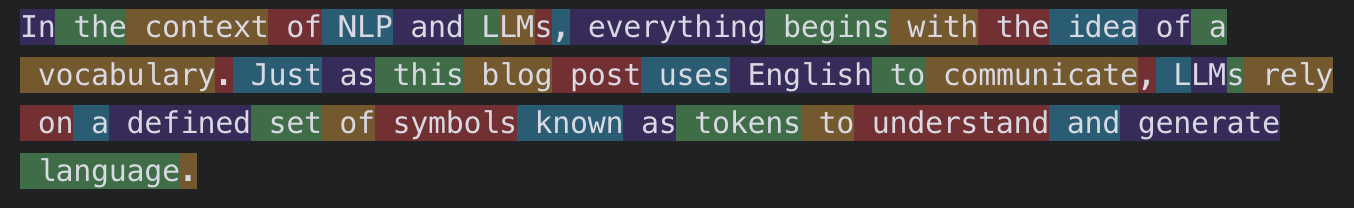

In the context of NLP and LLMs, everything begins with the idea of a vocabulary. Just as this blog post uses English to communicate, LLMs rely on a defined set of symbols known as tokens to understand and generate language. The image above shows OpenAI's tokenizer tool splitting a line of text into tokens.

Tokens are the fundamental building blocks of language for LLMs. A model’s vocabulary is simply the collection of all the tokens it has learned to recognize.
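One way to picture a vocabulary is as a lookup table from tokens to integer IDs. Here is a minimal sketch; the five tokens, their IDs, and the `<unk>` placeholder are made up for illustration, while real vocabularies hold tens or hundreds of thousands of entries.

```python
# A toy vocabulary: each token the model "knows" gets an integer ID.
# The tokens and IDs below are invented for illustration only.
vocab = {"Hello": 0, ",": 1, " world": 2, "!": 3, "<unk>": 4}

# The reverse mapping turns IDs back into tokens.
id_to_token = {idx: tok for tok, idx in vocab.items()}


def encode(tokens):
    """Map already-split tokens to IDs, falling back to <unk> for unknown ones."""
    return [vocab.get(tok, vocab["<unk>"]) for tok in tokens]


def decode(ids):
    """Map IDs back to tokens and join them into text."""
    return "".join(id_to_token[i] for i in ids)


ids = encode(["Hello", ",", " world", "!"])
print(ids)          # [0, 1, 2, 3]
print(decode(ids))  # Hello, world!
```

The model itself only ever sees the list of IDs, never the original characters.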

Why Tokenization Matters

LLMs don’t understand text the way humans do — they need numerical input to process language. Tokenization bridges this gap, converting human-readable text into a format the model can work with.

Without tokenization, LLMs would struggle to:

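Process text efficiently: Neural networks operate on numbers, so raw text has to be mapped to a fixed set of integer IDs before the model can do anything with it.

Manage vocabulary size: A vocabulary with millions of unique words would be inefficient to train and use, because the model's embedding and output layers grow with the number of entries.

Handle unseen, or out-of-vocabulary (OOV), words: Tokenization enables the model to deal with new or rare words by breaking them into familiar components. For example, a nonsense string like Adswlejaroifsduhgvuodfljskfasf can be broken into several smaller tokens that the model already knows.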
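To see how an unseen word can still be represented, here is a small sketch that splits a word into the longest pieces it can find in a vocabulary. The greedy longest-match strategy and the tiny vocabulary are assumptions chosen for clarity (this is closer to how WordPiece applies its vocabulary than to BPE), not a description of any particular production tokenizer.

```python
import string


def greedy_subword_split(word, vocab):
    """Split `word` left to right into the longest pieces found in `vocab`."""
    pieces = []
    start = 0
    while start < len(word):
        # Try the longest candidate first, then shrink it one character at a time.
        for end in range(len(word), start, -1):
            if word[start:end] in vocab:
                pieces.append(word[start:end])
                start = end
                break
        else:
            # Nothing matched, not even a single character.
            pieces.append("<unk>")
            start += 1
    return pieces


# Hypothetical vocabulary: every lowercase letter plus a few longer pieces.
vocab = set(string.ascii_lowercase) | {"hungry", "ish", "un", "able"}

print(greedy_subword_split("hungryish", vocab))  # ['hungry', 'ish']
print(greedy_subword_split("xqzish", vocab))     # ['x', 'q', 'z', 'ish']
```

Because every single letter is in the vocabulary, any lowercase word can be encoded, even if it falls all the way back to individual characters.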

Types of Tokenization

Different approaches to tokenization come with trade-offs. Let’s take a look:

Word-Level Tokenization

Splits text into individual words.

Example: "Hello, world!" → ["Hello", ",", "world", "!"]

Pros: Simple to implement.

Cons: Large vocabulary size, struggles with unseen or made-up words (e.g., "hungryish").
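A word-level tokenizer can be sketched in a couple of lines with a regular expression. The pattern below is one reasonable choice, not a standard: it treats runs of word characters as words and every other non-space character as its own token.

```python
import re


def word_tokenize(text):
    """Split text into words and standalone punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)


print(word_tokenize("Hello, world!"))  # ['Hello', ',', 'world', '!']

# A word-level vocabulary only knows the exact words it has seen.
known_words = set(word_tokenize("I am hungry, are you hungry?"))
print("hungry" in known_words)     # True
print("hungryish" in known_words)  # False
```

The last line shows the OOV problem in miniature: "hungryish" is one edit away from a known word, but a word-level vocabulary has no way to represent it.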

Character-Level Tokenization

Splits text into individual characters.

Example: "Hello" → ["H", "e", "l", "l", "o"]

Pros: Tiny vocabulary, great for handling unseen words.

Cons: Long input sequences, loss of word-level meaning, and much higher compute cost, since the model has to process one token per character.
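Character-level tokenization is almost trivial to write, which makes the trade-off easy to see. This sketch compares sequence length and vocabulary size for a short string; the simple whitespace-free comparison is only meant as a rough illustration.

```python
def char_tokenize(text):
    """Split text into individual characters (spaces included)."""
    return list(text)


print(char_tokenize("Hello"))  # ['H', 'e', 'l', 'l', 'o']

text = "Hello, world!"
tokens = char_tokenize(text)
print(len(tokens))       # 13 tokens for a two-word sentence
print(len(set(tokens)))  # 10 distinct characters make up the whole vocabulary
```

A word-level split of the same string gives 4 tokens, so the character-level sequence is more than three times as long.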

Subword Tokenization (e.g., Byte Pair Encoding)

A hybrid approach that balances the benefits of word and character tokenization.

Subword tokenization is the approach used by the models behind ChatGPT and Google Gemini.

One of the most common subword methods is Byte Pair Encoding (BPE), which starts from individual characters (or bytes) and repeatedly merges the most frequent adjacent pair into a new token.

Example: "lowest" → ["low", "est"]

Pros: Compact vocabulary, effective with OOV words, preserves partial word meaning.

Cons: More complex to implement.
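The core of BPE fits in a few short functions. The sketch below learns merges from a toy word-frequency table and then applies them to new words. The corpus, the frequencies, and the function names are all made up for illustration, and real tokenizers add details this leaves out (byte-level symbols, pre-tokenization, special tokens).

```python
from collections import Counter


def merge_symbols(symbols, pair):
    """Return a copy of `symbols` with every adjacent `pair` fused into one symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return out


def learn_bpe(word_freqs, num_merges):
    """Learn an ordered list of merges from a {word: frequency} table."""
    # Start with every word split into single characters.
    corpus = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        # Count adjacent pairs, weighted by how often each word occurs.
        pair_counts = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        corpus = {tuple(merge_symbols(s, best)): f for s, f in corpus.items()}
    return merges


def apply_bpe(word, merges):
    """Tokenize a word by replaying the learned merges in order."""
    symbols = list(word)
    for pair in merges:
        symbols = merge_symbols(symbols, pair)
    return symbols


# Toy corpus with invented frequencies.
word_freqs = {"low": 7, "lowest": 2, "newest": 3, "widest": 3}
merges = learn_bpe(word_freqs, num_merges=4)

print(apply_bpe("lowest", merges))   # ['low', 'est']
print(apply_bpe("slowest", merges))  # ['s', 'low', 'est']
```

Note that "slowest" never appeared in the toy corpus, yet it still splits into pieces the tokenizer learned from other words.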

Visualizing Tokenization

Let’s walk through how different tokenization strategies handle the sentence: "The unbelievable performance was breathtaking."

Word-Level: ["The", "unbelievable", "performance", "was", "breathtaking", "."]

Character-Level: ["T", "h", "e", " ", "u", ..., "g", "."]

Subword (BPE): ["The", "un", "believ", "able", "performance", "was", "breath", "taking", "."] (illustrative; the actual split depends on the merges a given tokenizer has learned)

As shown above, subword tokenization captures meaningful fragments while maintaining flexibility. This helps the model understand and generalize language more effectively.

OpenAI’s models use vocabularies built with a method called Byte Pair Encoding (BPE). GPT-4o and GPT-4o mini use a vocabulary of roughly 200,000 tokens (the o200k_base encoding), while GPT-4 and GPT-3.5 Turbo use roughly 100,000 (cl100k_base). These vocabularies include a wide variety of token types, not just full words. For example, common words like "the" or "apple" may be individual tokens, while longer or less frequent words like "capitalization" might be broken into subword tokens such as "capital" and "ization". The vocabulary also includes punctuation marks (e.g., ".", "!"), special characters (like "@" or emojis 😊), and even individual letters, character fragments, or raw bytes for handling rare or non-standard text (like characters from other scripts or typos). This flexible token design helps the model handle a wide range of language inputs efficiently while keeping the vocabulary size manageable.

Try OpenAI’s tokenizer tool to experiment with how different sentences are split into tokens. Another great tool I like to use is tiktokenizer.

Code Example (using Hugging Face Library)

Here's a simple Python code example using the Hugging Face Transformers library to demonstrate subword tokenization with the BERT tokenizer:
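What follows is a minimal sketch of such an example. It assumes the `transformers` package is installed and uses the `bert-base-uncased` checkpoint, which is my assumption since no specific checkpoint is named here. BERT's tokenizer uses WordPiece, a subword method closely related to BPE, and marks pieces that continue a word with a `##` prefix. The helper names `bert_wordpieces` and `merge_wordpieces` are illustrative, not part of the library.

```python
def bert_wordpieces(text, model_name="bert-base-uncased"):
    """Tokenize `text` with a pretrained BERT tokenizer.

    Requires `pip install transformers`; the first call downloads the
    vocabulary for `model_name` from the Hugging Face Hub.
    """
    # Imported here so the offline helper below works without the library.
    from transformers import AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    tokens = tokenizer.tokenize(text)              # subword strings
    ids = tokenizer.convert_tokens_to_ids(tokens)  # matching integer IDs
    return tokens, ids


def merge_wordpieces(tokens):
    """Glue '##' continuation pieces back onto the token before them."""
    words = []
    for tok in tokens:
        if tok.startswith("##") and words:
            words[-1] += tok[2:]
        else:
            words.append(tok)
    return words


# Hand-written WordPiece-style input, so this line runs without a download.
print(merge_wordpieces(["token", "##ization", "is", "fun", "!"]))
# ['tokenization', 'is', 'fun', '!']
```

With the library installed, `tokens, ids = bert_wordpieces("Tokenization is fun!")` returns the subword strings and their IDs; passing `tokens` to `merge_wordpieces` shows which pieces belong to the same word.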

Tokenization During Inference

When you input a prompt to an LLM like ChatGPT, the following steps happen:

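Tokenization: Your prompt is broken into tokens, and each token is mapped to its integer ID in the vocabulary.

Embedding: Each token ID is looked up in an embedding table, giving a numerical vector that captures its meaning.

Processing: These embeddings are passed through the model’s layers to compute contextual meaning.

Prediction: The model scores every token in its vocabulary and picks the next token based on context. Repeating this step, one token at a time, produces the full response.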

Detokenization: The generated tokens are stitched back into readable text.

This pipeline starts with tokenization — without it, nothing else would be possible.
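The five steps above can be sketched end to end with a toy "model". Everything here is a stand-in chosen for illustration: the seven-token vocabulary, the random embedding table, and especially `process` and `predict`, which replace the real network layers with an average and a dot product. The predicted token is therefore meaningless; the point is only the shape of the pipeline.

```python
import random

# Hypothetical toy vocabulary; index in the list is the token ID.
vocab = ["<unk>", "the", "cat", "sat", "on", "mat", "."]
token_to_id = {tok: i for i, tok in enumerate(vocab)}

random.seed(0)
EMBED_DIM = 4
# Random embedding table; a trained model would have learned these values.
embeddings = [[random.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in vocab]


def tokenize(text):
    """Step 1: split on whitespace and map each word to its ID."""
    return [token_to_id.get(w, token_to_id["<unk>"]) for w in text.lower().split()]


def embed(ids):
    """Step 2: look up one vector per token ID."""
    return [embeddings[i] for i in ids]


def process(vectors):
    """Step 3 (stand-in for the model's layers): average into one context vector."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def predict(context):
    """Step 4: score every vocabulary entry and return the best-scoring ID."""
    scores = [sum(c * e for c, e in zip(context, emb)) for emb in embeddings]
    return max(range(len(vocab)), key=scores.__getitem__)


def detokenize(ids):
    """Step 5: turn IDs back into readable text."""
    return " ".join(vocab[i] for i in ids)


prompt_ids = tokenize("The cat sat")
next_id = predict(process(embed(prompt_ids)))
print(prompt_ids)                          # [1, 2, 3]
print(detokenize(prompt_ids + [next_id]))  # prompt plus one predicted token
```

A real LLM runs steps 2 to 4 in a loop, appending each predicted token to the input before predicting the next one.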

Conclusion

Tokenization is a foundational step in the language modeling process. It allows LLMs to break down language into manageable units, helping them process text efficiently, deal with unseen words, and generate coherent responses.

Understanding tokenization helps us better appreciate what goes on behind the scenes when we chat with a model like ChatGPT. In the next post, we’ll explore embeddings, the next key step in transforming text into meaning.