#15: Teaching LLMs to Align with Us: Supervised Fine-Tuning and RLHF

We’ve seen how large language models (LLMs) are pretrained on vast amounts of data and how they generate text one token at a time during inference. But if we stopped there, these models would often give factually incorrect, biased, or just plain weird answers.

So how do we get models like ChatGPT to be helpful, harmless, and honest?

That’s where Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF) come in.

Why Pretraining Isn't Enough

Pretraining teaches the model to predict the next word using data drawn largely from the internet. But internet data is messy: it is full of contradictions, toxic content, and outdated facts.

A model trained only on this data might:

Repeat misinformation

Respond rudely

Make up answers

To fix this, we teach the model how to behave more like a helpful assistant through fine-tuning and human feedback.

Supervised Fine-Tuning

This is the first step in training the model to follow human values. After pretraining, we take the base model and train it on high-quality input-output pairs written by humans.

For example:
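Here is a minimal sketch of what such pairs might look like and how they could be turned into training text. The two examples, the `TEMPLATE` string, and the `build_training_example` helper are all hypothetical, made up for illustration. The mask reflects a common practice (assumed here, not stated above) of computing the loss only on the response, and it works per character rather than per token to keep the sketch simple.

```python
# Hypothetical SFT data: each item pairs a prompt with a human-written response.
sft_examples = [
    {
        "prompt": "Explain photosynthesis in one sentence.",
        "response": "Photosynthesis is how plants turn sunlight, water, "
                    "and carbon dioxide into sugar and oxygen.",
    },
    {
        "prompt": "What is the capital of France?",
        "response": "The capital of France is Paris.",
    },
]

# Assumed chat-style template; real systems use their own special tokens.
TEMPLATE = "User: {prompt}\nAssistant: "


def build_training_example(example):
    """Return the full training text and a mask (1 = part of the response)."""
    prefix = TEMPLATE.format(prompt=example["prompt"])
    text = prefix + example["response"]
    # Assumption: the loss is only computed where the mask is 1,
    # so the model learns to write the response, not the prompt.
    loss_mask = [0] * len(prefix) + [1] * len(example["response"])
    return text, loss_mask


for ex in sft_examples:
    text, mask = build_training_example(ex)
    print(text)
    print(f"trained on {sum(mask)} of {len(mask)} characters\n")
```

During fine-tuning, the model is trained with the same next-word objective as in pretraining, just on these curated pairs instead of raw internet text.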

While supervised fine-tuning improves model behavior, it still comes with important limitations:

The model only learns from the examples it's shown, so it doesn't generalize well beyond them.

It treats all responses in the dataset equally, without understanding which ones are better or worse.

It can still generate responses that are overly verbose, unclear, or not very helpful.
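One way to see why SFT treats every demonstration the same is to write out its usual training objective. This is a sketch that assumes the standard next-token cross-entropy loss. The symbols are introduced here for illustration: $D$ is the dataset of pairs, $x$ is a prompt, $y$ is its human-written response, and $\pi_\theta$ is the model.

```latex
\mathcal{L}_{\text{SFT}}(\theta)
  = -\frac{1}{|D|} \sum_{(x, y) \in D} \sum_{t=1}^{|y|}
    \log \pi_\theta\left(y_t \mid x, y_{<t}\right)
```

Every pair enters the sum in the same way. Nothing in this loss says that one response is better than another, only that each should be imitated.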

This brings us to reinforcement learning.

Reinforcement Learning from Human Feedback (RLHF)

If supervised fine-tuning is like teaching with flashcards, RLHF is like coaching. We let the model try something, evaluate its attempt, and give feedback to improve future performance.

Here’s how it works:

Generate multiple responses

The fine-tuned model is asked to generate several replies to the same prompt.

Humans rank the responses

Labelers review these options and rank them from best to worst. These rankings capture both what a good response looks like and what to avoid.

Train a reward model

A separate model is trained using these rankings to predict which responses humans prefer. This reward model learns to score new responses based on how well they match human preferences.

Fine-tune the model with reinforcement learning

The fine-tuned model from the first step is then optimized with reinforcement learning to maximize scores from the reward model.
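The steps above can be sketched in a few lines. This is a toy illustration under stated assumptions, not how any particular lab implements it. It assumes rankings are split into pairs, the reward model is trained with a pairwise logistic loss, and the reinforcement learning step subtracts a penalty for drifting away from the fine-tuned model. The function names, the `toy_scores` values, and the `beta` parameter are all hypothetical.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked_responses):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    # combinations() keeps input order, so the first item of each pair
    # is always the one the labeler ranked higher.
    return list(combinations(ranked_responses, 2))


def pairwise_loss(score_preferred, score_rejected):
    """-log(sigmoid(preferred - rejected)), written as log(1 + exp(-diff)).

    The loss is small when the reward model scores the preferred
    response higher than the rejected one.
    """
    diff = score_preferred - score_rejected
    return math.log1p(math.exp(-diff))


def shaped_reward(reward_score, logp_policy, logp_reference, beta=0.1):
    """Reward-model score minus a penalty for drifting from the reference.

    Assumption: logp_policy and logp_reference are the log-probabilities
    of the same response under the model being trained and under the
    frozen fine-tuned model.
    """
    return reward_score - beta * (logp_policy - logp_reference)


# Hypothetical ranking from a labeler, best first.
ranked = ["concise, correct answer", "correct but rambling answer", "rude answer"]
pairs = ranking_to_pairs(ranked)

# Stand-in for a learned reward model: fixed scores per response.
toy_scores = {
    "concise, correct answer": 2.0,
    "correct but rambling answer": 0.5,
    "rude answer": -1.0,
}

avg_loss = sum(pairwise_loss(toy_scores[a], toy_scores[b]) for a, b in pairs) / len(pairs)
print(f"{len(pairs)} pairs, average reward-model loss {avg_loss:.3f}")
print("shaped reward:", shaped_reward(2.0, logp_policy=-5.0, logp_reference=-6.0))
```

In a real system the scores would come from a neural network trained to minimize this loss, and the shaped reward would drive a policy-gradient algorithm such as PPO. The penalty term is there so the model cannot chase reward-model scores by drifting into text the fine-tuned model would never produce.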

RLHF Doesn’t End at Training

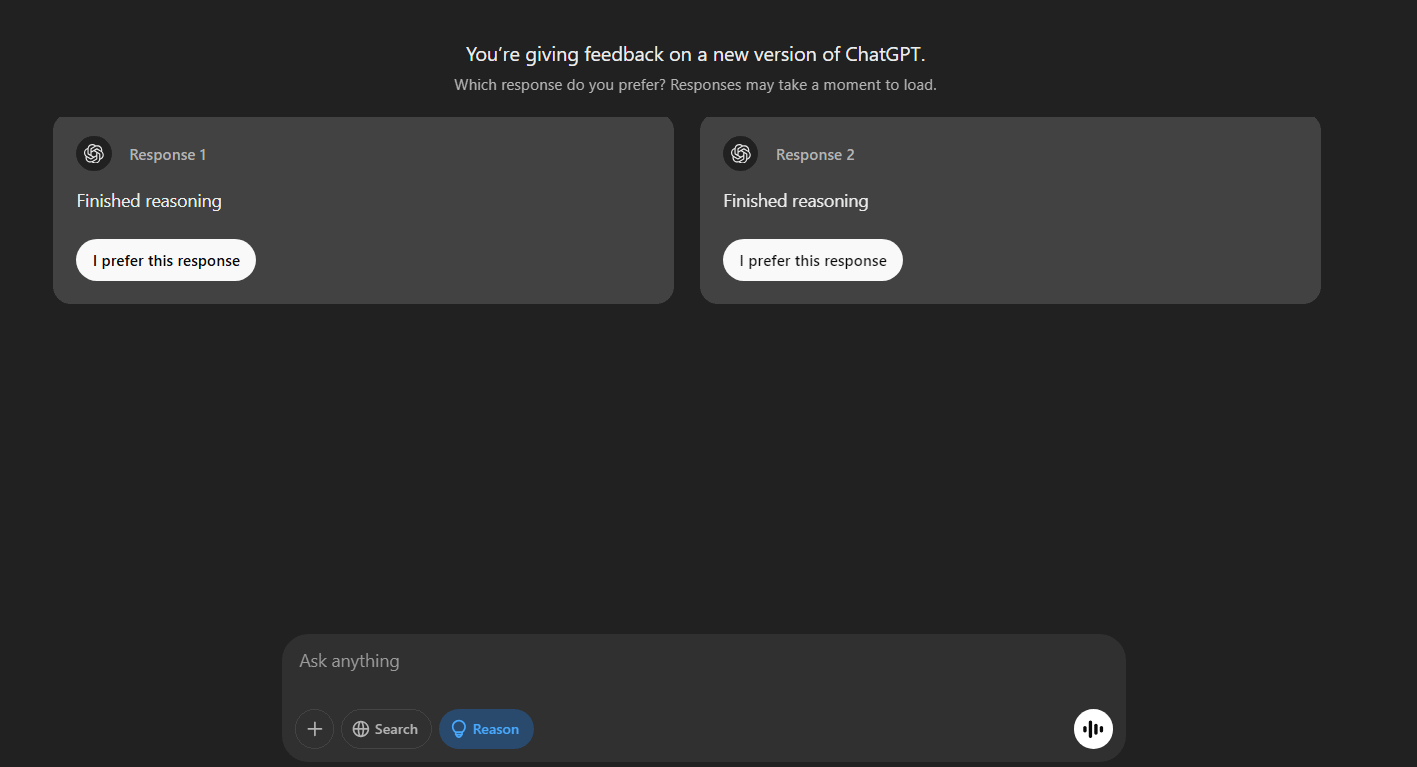

Feedback collection continues after deployment. You've probably seen it in action: ChatGPT sometimes shows you two different responses to the same prompt and asks which one is better.

Essentially, OpenAI crowdsources preference data from users, which can be used to refine future versions of the model, for example by updating the reward model.

This kind of RLHF helps adapt the model to how people actually use it rather than how researchers think it will be used.

Why RLHF Works

RLHF teaches the model what makes one answer better than another in context.

It’s how models learn to:

Say “I don’t know” when they should

Be concise instead of rambling

Avoid giving dangerous or offensive advice

Ask clarifying questions when the prompt is vague

It aligns the model not just with the data, but with human preferences.

RLHF Is Not Perfect

Even with RLHF, models can:

Still hallucinate facts

Mirror human biases in the data

Fail to reason like a human would

But compared to raw pretrained models, RLHF-tuned models are much more usable and reliable.

At this point, you’ve seen how LLMs are pretrained, fine-tuned, and refined with human feedback. Let’s tie it all together with a simplified lifecycle of how models like ChatGPT are trained and then used:

Pretraining – Learn language patterns from huge datasets

Supervised Fine-Tuning (SFT) – Learn good behavior from examples

RLHF – Learn human preferences by getting feedback and optimizing for it

Inference – Use the trained model to generate helpful responses

Together, these steps transform a raw language predictor into a digital assistant. What starts as a model that just predicts the next word becomes something that can follow instructions, answer questions, and even hold a conversation.

Thank you so much for reading!