#20: What If Waymo Worked Like ChatGPT? Would We All Be Safe?

Last month, Waymo released its latest safety impact report, which covers 96 million driverless miles. The report revealed something remarkable: compared with an average human driver covering the same distance, Waymo’s vehicles were involved in 91% fewer crashes resulting in serious injury.

A recent Futurism article then prompted an interesting question:

“What if Waymo had worked like ChatGPT?”

Would we still be this safe?

That got me thinking about the contrast between how we approach safety for self-driving cars and for AI chatbots.

The Nature of Two Kinds of AI

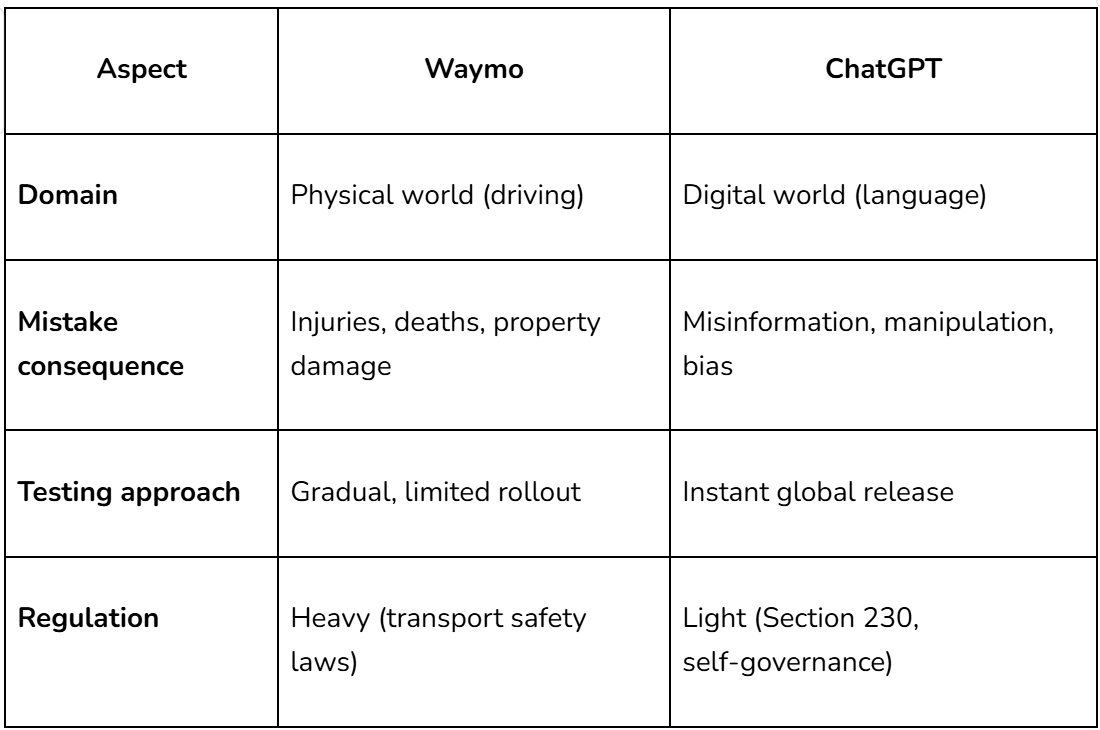

Both ChatGPT and Waymo are AI decision-makers. They continuously observe, reason, and make judgments, just in different domains.

Both interact with people and act under uncertainty, yet one was built with safety as a requirement from day one, while the other prioritized access and scale.

Why Waymo Is Safer

Waymo’s impressive record is the result of slow and rigorous iteration:

Over 20 million real-world miles and tens of billions of simulated miles before wide release

Still operating in only a handful of cities after 10 years

Subject to federal and state oversight, safety audits, and transparency reports

Safety wasn’t optional for Waymo. If a self-driving car makes one bad decision, regulators and the public react instantly, and an early mishap can shape the entire industry. Just look at how Waymo’s path diverged from Uber’s self-driving program, abandoned after one of its test vehicles killed a pedestrian in 2018, or at how GM’s Cruise bet derailed after a 2023 crash in San Francisco.

Why LLMs Took the Opposite Route

Large Language Models (LLMs) like ChatGPT, Gemini, or Claude have taken the opposite path: release first, align later. There’s no equivalent of a federal “AI Driving Test,” no mandated safety mileage logs, and no real penalties for unsafe advice unless it causes demonstrable harm.

Yet these systems are increasingly trusted for:

Medical advice

Emotional support

Education

Financial decisions

Each of these carries real-world stakes. In fact, more casualties have already been linked to unsafe LLM advice than to Waymo’s self-driving cars. The examples are tragic, from teenagers encouraged toward self-harm to vulnerable users misled in moments of crisis. Reddit and other forums are filled with disturbing stories that underscore one point: words can cause harm, too.

Regulation Shapes Culture

The contrast between Waymo and ChatGPT reveals something deeper: regulation doesn’t just constrain innovation; it defines its culture.

Waymo operates in the legacy automobile sector, where “safety first” has long been the norm. ChatGPT, on the other hand, lives in the freewheeling internet ecosystem, built on a “move fast and break things” mentality.

If self-driving cars had launched under those same rules, we might have seen a mass rollout years ago, but also mass casualties.

What Would a Safer ChatGPT Look Like?

Imagine a world where ChatGPT had been rolled out like Waymo:

Trained and tested extensively before public release

Accessible only in limited regions or professions first

Subject to third-party safety reviews, simulations, and red-teaming

Legally required to demonstrate safety metrics, just as cars track “collisions per mile”

It would evolve more slowly, but the trust we place in it might be far deeper.

The Bigger Lesson

AI safety isn’t just about better models; it’s about better norms.

Waymo’s journey shows that when safety is treated as a prerequisite, innovation doesn’t stall; it matures.

So maybe the real question isn’t whether ChatGPT should be safer, but how we define “safe” in a world of words, especially when those words are taken as advice from a trusted confidant.

If cars have “crashes per million miles,” what’s the LLM equivalent?

How do we measure when advice, emotion, or influence goes off the road?

Until we can answer that, we’ll keep driving fast, but not necessarily safely.